In 1950, the mathematician Alan Turing put forth the question, “Can machines think?” Rather than attempt to answer it using conventional logic, he proposed a new, disruptive model: the Imitation Game.

The Problem

One can look at Alan Turing (1912-1954) as the “father of theoretical computer science and artificial intelligence.” His contributions to modern computer science cannot be overstated. He asked whether computers could one day have the cognitive capabilities of humans. Some argue that day has arrived. Yet, how do we know?

The Turing Game

The Imitation Game is played by three people (humans).

(A) a Man,

(B) a Woman, and

(C) an Interrogator (of either gender)

- The Interrogator, segregated into a separate room, is to determine which of the two players is the man and which is the woman.

- The Interrogator asks the two players (known to him only by the labels X and Y) a series of questions, the answers to which are written or passed through an intermediary so as not to reveal the players’ genders. At the end, the Interrogator declares either “X is the man and Y is the woman” or the reverse.

- The role of Player (B) is to help (C) identify the players correctly, while (A) tries to deceive (C) into making the wrong identification.

Turing then introduces the twist:

- “What will happen when a machine takes the part of A in this game? Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, ‘Can Machines Think?’”

In his paper, Turing goes on to elaborate in detail, but for our purposes, the bottom line is this:

Will the interrogator’s error rate in an all-human game be the same as when a machine (a digital decision maker) becomes one of the players?
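Framed this way, Turing’s criterion is a comparison of two error rates. Turing did not prescribe a statistical procedure, so the following is only a minimal Python sketch, assuming each game’s outcome is recorded as a wrong or correct identification and assuming a standard pooled two-proportion z-test is an acceptable way to compare the rates. The game counts are hypothetical, not results from any actual experiment.

```python
import math


def error_rate(wrong, games):
    """Fraction of games in which the interrogator made the wrong identification."""
    return wrong / games


def two_proportion_z(wrong_a, games_a, wrong_b, games_b):
    """Pooled two-proportion z statistic for the difference in error rates."""
    p_a = error_rate(wrong_a, games_a)
    p_b = error_rate(wrong_b, games_b)
    pooled = (wrong_a + wrong_b) / (games_a + games_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / games_a + 1 / games_b))
    return (p_a - p_b) / std_err


def two_sided_p_value(z):
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical tallies: 100 man-versus-woman games and 100 machine-versus-woman games.
human_wrong, human_games = 30, 100
machine_wrong, machine_games = 28, 100

z = two_proportion_z(human_wrong, human_games, machine_wrong, machine_games)
p = two_sided_p_value(z)
print(f"human error rate:   {error_rate(human_wrong, human_games):.2f}")
print(f"machine error rate: {error_rate(machine_wrong, machine_games):.2f}")
print(f"z = {z:.3f}, two-sided p = {p:.3f}")
```

A large p-value says only that this sample cannot distinguish the two error rates; it does not prove they are equal, which is one reason more rigorous statistical protocols matter when judging whether a machine has matched the human baseline.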

Early AI and the Turing Test

In 1955, John McCarthy and Claude Shannon, along with Marvin Minsky and Nathaniel Rochester, defined the AI problem as “that of making a machine behave in ways that would be called intelligent if a human were so behaving.” In 2013, when asked about Turing’s test in a taped interview, Minsky said, “The Turing test is a joke, sort of, about saying a machine would be intelligent if it does things that an observer would say must be being done by a human.” This materially connects the early definition of the AI problem to Turing’s test.

Our intent here is not to split academic hairs but to highlight a concept that predates most readers and is rarely a subject of serious discussion. The point is that the problem was documented 75 years ago or earlier, and this pioneering thinking is the basis of our contemporary definition and implementation of Artificial Intelligence.

Before Turing

In one sense, we all stand on the shoulders of giants who preceded us. “When you think about the origins of computer science, the name Ada Lovelace might not come to mind immediately—but it should. Born in 1815, Ada Lovelace was an English mathematician and writer whose visionary work laid the foundation for modern computing. Collaborating with Charles Babbage (considered to be the father of computing), the inventor of the Analytical Engine, Lovelace wrote what is widely recognized as the first algorithm designed for a machine.”

“Ada was the first to explicitly articulate this notion and in this she appears to have seen further than Babbage. She has been referred to as ‘prophet of the computer age’. Certainly, she was the first to express the potential for computers outside mathematics.” In the computing family, we might also think of her as the grandmother of computing.

Other women who played a major role in the evolution of computing, and thus of Artificial Intelligence, after Turing include Navy Rear Admiral Grace Hopper, who developed the first compiler for a programming language, among other innovations. Many others made significant contributions. No doubt women will continue to play a vital role in this game-changing technology.

The Solution(s)

Twelve years have passed since Minsky’s statement that the Turing test is a joke. Today’s artificial intelligence capability has changed that landscape.

The question is no longer whether ‘we’ can pass the Turing test, but how far and how fast it will be eclipsed. This suggests exciting times, with associated challenges and risks.

Contemporary Thinking about the Test

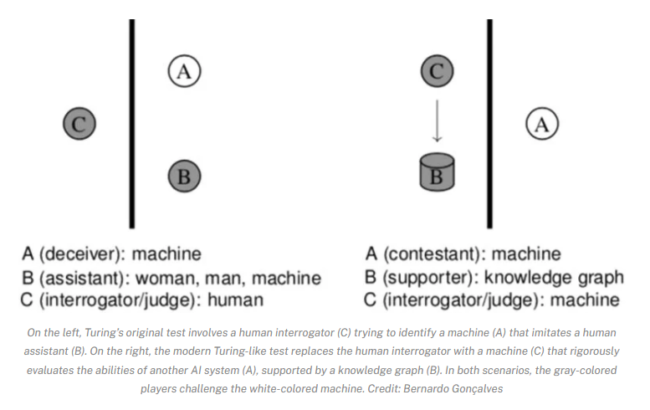

“As AI systems are increasingly deployed in high-stakes scenarios, we may need to move beyond aggregate metrics and static benchmarks of input–output pairs, such as the Beyond the Imitation Game Benchmark (BIG-bench). We should be prepared to evaluate an AI’s cognitive abilities in a way that resembles the realistic settings in which it will be used. This can be done with modern Turing-Like Tests.” The figure above illustrates this approach.

“Looking ahead, Turing-like AI testing that would introduce machine adversaries and statistical protocols to address emerging challenges such as data contamination and poisoning. These more rigorous evaluation methods will ensure AI systems are tested in ways that reflect real-world complexities, aligning with Turing’s vision of sustainable and ethically guided machine intelligence.”

Computer Game Bot Turing Test

“The computer game bot Turing test is a variant of the Turing test, where a human judge viewing and interacting with a virtual world must distinguish between other humans and video game bots, both interacting with the same virtual world. This variant was first proposed in 2008 by Associate Professor Philip Hingston of Edith Cowan University, and implemented through a tournament called the 2K BotPrize.”

This pundit believes that the Turing test dam has been broken and that greater things lie ahead.

Today’s Father of AI – Geoffrey Hinton, The Nobel Prize in Physics 2024

“When we talk about artificial intelligence, we often mean machine learning using artificial neural networks. This technology was originally inspired by the structure of the brain. In an artificial neural network, the brain’s neurons are represented by nodes that have different values. In 1983–1985, Geoffrey Hinton used tools from statistical physics to create the Boltzmann machine, which can learn to recognize characteristic elements in a set of data. The invention became significant, for example, for classifying and creating images.”

Hinton shared the prize with John J. Hopfield; both laureates used tools from physics to find patterns in information. Dr. Hinton has expressed some concerns regarding his (AI) child, as he stated in an interview from October 9, 2023.

Theoretical Basis of Tests

In this pundit’s opinion, the Turing test used Game Theory as a fundamental underpinning. A later theory, Relationships, Behaviors and Conditions (RBC), enables newer derivatives of the original Turing test and supports different approaches to the problem. Both theories are briefly described below.

Readers may skip this section; these details are provided for completeness and to support the position taken. We understand that this level of detail is not for every reader.

Over the past few years, there has been an impassioned argument regarding ‘The Science.’ We addressed this issue in 2020, and the following paragraph is taken from that blog post, They Blinded Me with Science.

“According to Scientific American, scientific claims are falsifiable—that is, they are claims where you could set out what observable outcomes would be impossible if the claim were true—while pseudo-scientific claims fit with any imaginable set of observable outcomes. What this means is that you could do a test that shows a scientific claim to be false, but no conceivable test could show a pseudo-scientific claim to be false.

Sciences are testable, pseudo-sciences are not.”

There is peer-reviewed academic agreement that the hypotheses of both Game Theory and RBC are testable.

Game Theory

Concurrent with Turing’s development of the Imitation Game, game theory was being formalized as an approach to modeling economic behavior among ‘rational’ economic actors.

“Game theory emerged as a distinct subdiscipline of applied mathematics, economics, and social science with the publication in 1944 of Theory of Games and Economic Behavior, a work of more than six hundred pages written in Princeton by two Continental European emigrés, John von Neumann, a Hungarian mathematician and physicist who was a pioneer in fields from quantum mechanics to computers, and Oskar Morgenstern, a former director of the Austrian Institute for Economic Research. They built upon analyses of two-person, zero-sum games published in the 1920s.” This treatise was developed from the works of other pioneers of the 1920s and 1930s.
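Because the two-person, zero-sum game is the structural core of this work (and of the negotiation research discussed below), a small example may help. In a zero-sum game one player’s gain is exactly the other’s loss, so a single matrix of payoffs to the row player describes the whole game. The Python sketch below, using made-up payoff values, computes the pure-strategy maximin and minimax values and lists any saddle points. Von Neumann’s minimax theorem extends the idea to mixed (randomized) strategies, which this sketch does not attempt.

```python
def maximin(payoffs):
    """Row player's guaranteed payoff: the best of the row minimums."""
    return max(min(row) for row in payoffs)


def minimax(payoffs):
    """Column player's cap on losses: the smallest of the column maximums."""
    return min(max(column) for column in zip(*payoffs))


def saddle_points(payoffs):
    """Cells that are the minimum of their row and the maximum of their column."""
    points = []
    for i, row in enumerate(payoffs):
        for j, value in enumerate(row):
            column = [r[j] for r in payoffs]
            if value == min(row) and value == max(column):
                points.append((i, j))
    return points


# Hypothetical payoffs to the row player; the column player receives the negative.
stable_game = [
    [3, 1],
    [4, 2],
]
# Matching pennies: no pure-strategy equilibrium, so mixed strategies are needed.
matching_pennies = [
    [1, -1],
    [-1, 1],
]

for name, game in [("stable", stable_game), ("matching pennies", matching_pennies)]:
    print(name, maximin(game), minimax(game), saddle_points(game))
```

When maximin equals minimax (as in the first matrix, where both are 2), neither player can gain by unilaterally leaving the saddle point; when they differ (as in matching pennies, -1 versus 1), no pure-strategy solution exists.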

An interesting side note: “The software industry is a little over half a century old (in 2005), but its roots date back to the textile loom programming of the seventeenth century that powered the Charles Babbage Difference Engine. In 1946, ENIAC (Electronic Numerical Integrator and Computer), the first large-scale general-purpose electronic computer built at the University of Pennsylvania, ushered in the modern computing era.

That same year (1946), John von Neumann coauthored a paper, Preliminary Discussion of the Logical Design of an Electronic Computing Instrument. The von Neumann general-purpose architecture, which defines the process of executing a continuous cycle of extracting an instruction from memory, processing it, and storing the results, has been used by programmers ever since.”(1)
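The cycle described in that passage (fetch an instruction from memory, process it, store the result) can be illustrated with a toy stored-program machine. This is a minimal Python sketch; the four-instruction set (LOAD, ADD, STORE, HALT) and the single accumulator are hypothetical simplifications, not the design in von Neumann’s paper. The point it shows is that instructions and data share one memory.

```python
def run(memory):
    """Execute a toy stored-program machine until HALT; returns the final memory."""
    pc = 0   # program counter: address of the next instruction
    acc = 0  # single accumulator register (hypothetical simplification)
    while True:
        op, arg = memory[pc]  # fetch the instruction stored at address pc
        pc += 1
        if op == "LOAD":      # decode and execute
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":   # store the result back into memory
            memory[arg] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")


# Program and data live in the same list: addresses 0-3 hold instructions,
# addresses 4-6 hold data (two inputs and a slot for the sum).
program = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", None),
    2,
    3,
    0,
]

result = run(list(program))
print(result[6])
```

Keeping the program in ordinary memory is what makes the machine general purpose: loading a different list of instructions changes its behavior without rewiring any hardware.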

Perhaps this is part of the convergence of two major breakthroughs: game theory and modern computer architecture.

In 1996, this author’s doctoral dissertation, Cross Cultural Negotiations between Japanese and American Businessmen: A Systems Analysis, (Exploratory Study) was “An exploratory test of this framework in the context of two-person zero-sum simulated negotiation between Japanese businessmen and American salesmen, both living and working in the United States. The integration of structural (game theory) and process theories (RBC) into a dynamic systems model seeks to better understand the nature of complex international negotiations. Advanced statistical techniques, such as structural equation modeling are useful tools providing insight into these negotiation dynamics.”

This work is the basis for the cloud-based Serious Games used to train cross-cultural teams.

Relationships, Behaviors and Conditions (RBC) Framework

This model has been part of many of this pundit’s writings since 1996. A brief overview from a 2011 article follows.

“The Relationships, Behaviors, and Conditions (RBC) model was originally developed to address issues around cross cultural (international) negotiation processes. Relationships are the focal point of this perspective, reflecting commonality of interest, balance of power and trust as well as intensity of expressed conflict.

Behavior in this model is defined as a broad term including multidimensions – intentional as well as unintentional. Finally, Conditions are defined as active and including circumstances, capabilities and skills of the parties, culture, and the environment. Of course, time is a variable in this model as well.

One key feature of the R B C Framework is its emphasis on interactive relationships while providing an environment for multiple levels of behavioral analysis. This makes it a useful tool to better understand the new regulatory processes currently unfolding. As we will see later, the number of constituents now engaged belays the use of simplistic linear decision models.”(2)

Operational Excellence

The following excerpt from our 2017 blog post, Excellent Behaviors: Assessing Relationships in the Operational Excellence Ecosystem, addresses the role of the RBC Framework in organizational excellence.

“One of the hot business buzzwords of 2017 is ‘Operational Excellence.’ It has been the subject for many pundits, including this one.

In October and November we published a two-part series, Assuring Operational Excellence from Contractors and Their Subcontractors through BTOES Insights. Each part included a link to additional information.

The October edition featured an excerpt of our Implementing a Culture of Safety book. In the November edition we released our new Best Practice solution, Attaining & Sustaining Operational Excellence: A Best Practice Implementation Model. We are proud to make it available herein and in general.

One of the basic tenets of the RBC Framework is the general construct that Relationships cannot be determined a priori. The well-used example is a man and a woman sitting on a bench at a bus stop. Are they married, siblings, coworkers, friends or simply two people waiting to catch the same/different bus?

Their relationship cannot be known directly. However, their Behaviors will provide insight into how they relate to each other. Romantic behavior may indicate marriage, dating, an affair etc. They may still be coworkers but most likely are not strangers.

The third dimension, Conditions (environment) can be considered the stage upon which behaviors play. So, what does this have to do with Operational Excellence?

Another component of our digital environment is Human Systems Integration (HSI). In our forthcoming book, we have defined HSI as, “Human Systems Integration (HSI) considers the following seven domains: Manpower, Personnel, Training, Human Factors Engineering, Personnel Survivability, Habitability, and Environment, Safety and Occupational Health (ESOH). In simple terms, HSI focuses on human beings and their interaction with hardware, software, and the environment.”

We have crossed the Turing Rubicon. How will your organization capitalize on these opportunities?

Hardcopy References

- (1) Shemwell, Scott M. (2005). Disruptive Technologies—Out of the Box. Essays on Business and Information Technology Alignment Issues of the Early 21st Century. New York: Xlibris. p. 127.

- (2) Shemwell, Scott M. (2011, January). The Blast Heard Around the World. Petroleum Africa Magazine. pp. 32-35.

Pre-order our new book

Navigating the Data Minefields:

Management’s Guide to Better Decision-Making

We are living in an era of exponential growth in data and software, a substantive flood hitting us every day. Geek heaven! But what if information technology is not your cup of tea, and you may even need your kids to help with your smart devices? This may not be a problem at home; however, what if your job depends on Big Data and Artificial Intelligence (AI)?

Available April 2025

For More Information

Please note, RRI does not endorse or advocate the links to any third-party materials herein. They are provided for education and entertainment only.

See our Economic Value Proposition Matrix® (EVPM) for additional information and a free version to build your own EVPM.

The author’s credentials in this field are available on his LinkedIn page. Moreover, Dr. Shemwell is the coauthor of the 2023 book, “Smart Manufacturing: Integrating Transformational Technologies for Competitiveness and Sustainability.” His focus is on Operational Technologies.

We are also pleased to announce our forthcoming book to be released by CRC Press in April 2025, Navigating the Data Minefields: Management’s Guide to Better Decision-Making. This is a book for the non-IT executive who is faced with making major technology decisions as firms acquire advanced technologies such as Artificial Intelligence (AI).

“People fail to get along because they fear each other; they fear each other because they don’t know each other; they don’t know each other because they have not communicated with each other.” (Martin Luther King speech at Cornell College, 1962). For more information on Cross Cultural Engagement, check out our Cross-Cultural Serious Game. You can contact this author as well.

For more details regarding climate change models, check out Bjorn Lomborg and his book, False Alarm: How Climate Change Panic Costs Us Trillions, Hurts the Poor, and Fails to Fix the Planet.

Regarding the economics of Climate Change, check out our blog, Crippling Green.

For those start-up firms addressing energy (including renewables) challenges, the author can put you in touch with Global Energy Mentors, which provides no-cost mentoring services from energy experts. If interested, check it out and give me a shout.